If AI has been in the news recently, it’s mainly through large language models (LLMs), which require substantial computing power for training or inference. Thus, it’s common to see developers using them via turnkey APIs, as we did in a previous article. But this is changing thanks to more open and efficient models and tools, which you can easily deploy on Clever Cloud.

Indeed, while our platform was designed for a variety of uses, we have always paid particular attention to its flexibility and the freedom it gives our customers. This means they can easily use it to address new markets, with minimal adaptation effort.

To confirm this, we recently tried to deploy open source AI models such as those derived from LLaMA and Mistral. What sets them apart is that they come in lightweight versions, which don’t necessarily require a GPU to run. As a result, they can be hosted as simple Clever Cloud applications, as long as they are allocated enough memory.

Let’s ollama (with a web UI)!

To do this, we’ve chosen ollama, an MIT-licensed project that lets you download models from a registry, or use your own. It runs as a server that communicates via an API, and is very easy to host on our infrastructure.
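Since ollama exposes an HTTP API, you can also query it directly from wherever it runs (your own machine, or the instance itself). The minimal sketch below assumes ollama’s default local address, 127.0.0.1:11434, and its /api/generate endpoint; the helper names (json_escape, build_generate_payload, ollama_generate) and the OLLAMA_URL variable are hypothetical, not part of ollama.

```shell
#!/bin/bash
# Minimal sketch, assuming ollama listens on its default local address
# and the requested model has already been pulled.
# OLLAMA_URL and the three functions below are hypothetical names.
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# Escape backslashes and double quotes, and turn newlines into "\n",
# so a prompt can be embedded in a JSON string
# (other control characters are not handled here)
json_escape() {
  printf '%s' "$1" |
    sed -e 's/\\/\\\\/g' -e 's/"/\\"/g' |
    awk 'NR > 1 { printf "%s", "\\n" } { printf "%s", $0 }'
}

# Build the request body for POST /api/generate;
# "stream": false asks for a single JSON object instead of a stream of chunks
build_generate_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send the request; the generated text is in the "response" field of the reply
ollama_generate() {
  build_generate_payload "$1" "$2" |
    curl -fsS -H 'Content-Type: application/json' -d @- "${OLLAMA_URL}/api/generate"
}
```

For example, once orca-mini has been pulled, ollama_generate orca-mini "Why is the sky blue?" prints the server’s JSON reply.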

- Create a Clever Cloud account (and get 20 euros in credits)

To get started, you’ll need a Clever Cloud account. You can create the application and follow the next steps either in our web interface, the Console, or with our open source CLI, Clever Tools. We’ll use the latter below.

We’ll assume that your machine is running git and a recent version of Node.js. If you haven’t yet installed Clever Tools on your system, type (with system administrator rights, or sudo if necessary):

npm i -g clever-tools
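The installation above assumes that git and a recent Node.js are available. A minimal sketch to check this first could look like the following; node_major, check_prerequisites and the default threshold of 18 in MIN_NODE_MAJOR are hypothetical choices, not official Clever Tools requirements.

```shell
#!/bin/bash
# Minimal sketch checking the prerequisites assumed above.
# MIN_NODE_MAJOR is a hypothetical threshold for "recent", not an
# official Clever Tools requirement.
MIN_NODE_MAJOR="${MIN_NODE_MAJOR:-18}"

# Print the major number of a version string such as "v20.11.1"
node_major() {
  local version="${1#v}"
  printf '%s\n' "${version%%.*}"
}

check_prerequisites() {
  local tool major
  # Every tool must be reachable through $PATH
  for tool in git node npm; do
    if ! command -v "$tool" > /dev/null 2>&1; then
      echo "Missing prerequisite: $tool" >&2
      return 1
    fi
  done
  major="$(node_major "$(node --version)")"
  if [ "$major" -lt "$MIN_NODE_MAJOR" ]; then
    echo "Node.js $major found, $MIN_NODE_MAJOR or later expected" >&2
    return 1
  fi
  echo "git, node and npm found (Node.js $major)"
}
```

You could then chain it with the installation: check_prerequisites && npm i -g clever-tools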

clever login

Once logged in, you can check that everything has gone smoothly with the following command:

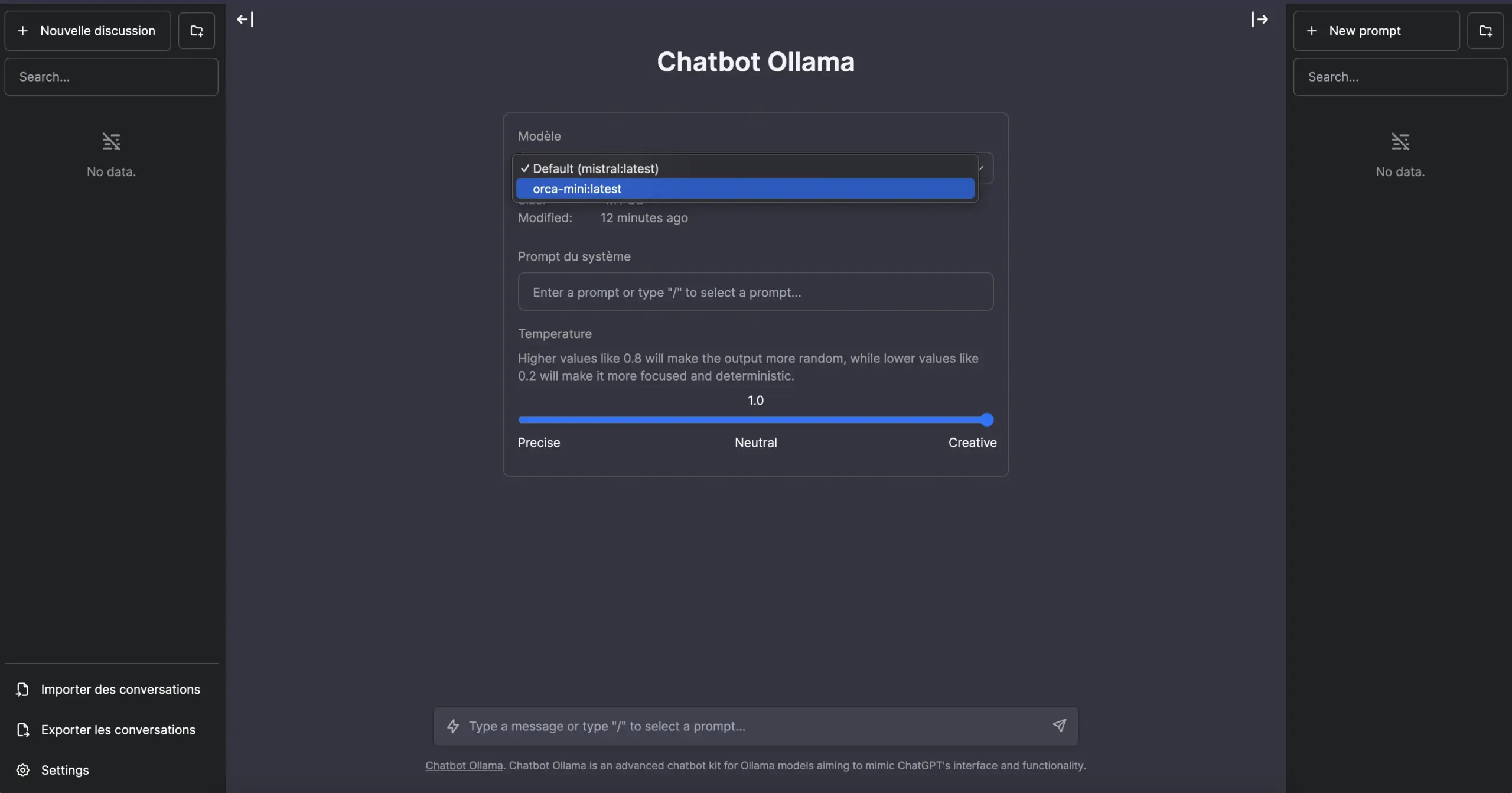

clever profile

Next, we create a Clever Cloud application using Node.js. It will install ollama to download and run our models, and serve a web interface (chatbot-ollama, also under MIT license) that lets us query the server from any browser, whether on a computer or a smartphone.

This interface also stores chats locally in the browser, so it requires no database, and it offers an import/export function. You can also choose between different models, set some of their parameters, configure system instructions, various prompts, and so on.

mkdir myollama && cd myollama

git init

curl -L https://github.com/ivanfioravanti/chatbot-ollama/archive/refs/heads/main.tar.gz | tar -xz --strip-components=1

clever create -t node myollama && clever scale --flavor L

clever env set CC_POST_BUILD_HOOK "npx next telemetry disable && npm run build"

clever env set CC_PRE_BUILD_HOOK "./ollama_setup.sh"

clever env set CC_PRE_RUN_HOOK "./ollama_start.sh"

echo "orca-mini" > models.list

echo "mistral" >> models.list

echo "codellama" >> models.list

The code above downloads the web interface sources, creates the application on an L instance (the minimum size for loading such models into memory), and adds a file listing the models we wish to fetch during the build. It also declares several environment variables: they run the scripts that install ollama and start its server, and, once the dependencies are installed, disable telemetry for Next.js (the framework used by the web interface) before building it.
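models.list simply holds one model name per line. If you expect to edit it by hand, a slightly more defensive reader than a bare while read loop may help. The read_models function below is a hypothetical sketch, and treating lines starting with # as comments is this example’s own convention, not an ollama feature.

```shell
#!/bin/bash
# Hypothetical sketch: print the model names from a models.list-style file,
# one per line, ignoring blank lines and lines starting with "#"
# (a convention of this example, not an ollama feature).
read_models() {
  local file="${1:-models.list}" line
  # "|| [ -n ... ]" also keeps a last line that has no trailing newline
  while IFS= read -r line || [ -n "$line" ]; do
    # Trim leading and trailing whitespace
    line="${line#"${line%%[![:space:]]*}"}"
    line="${line%"${line##*[![:space:]]}"}"
    # Skip blank lines and comments
    case "$line" in
      '' | '#'*) continue ;;
    esac
    printf '%s\n' "$line"
  done < "$file"
}
```

For instance, read_models models.list | while IFS= read -r model; do ollama pull "$model"; done would pull each listed model.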

Now add some scripts

Our application is almost ready. We still have to create two executable files:

touch ollama_setup.sh && chmod +x ollama_setup.sh

touch ollama_start.sh && chmod +x ollama_start.sh

ollama_setup.sh downloads the ollama binary and the models from our list. Open it with your favorite editor and insert the following content:

#!/bin/bash

# We define the folder (in $PATH) where the ollama binary will be placed
BIN_DIR="${HOME}/.local/bin"
mkdir -p "${BIN_DIR}"

ollama_start() {
  echo "Downloading and launching ollama..."
  # -f makes curl fail on HTTP errors, so a failed download is reported
  curl -fLs https://ollama.ai/download/ollama-linux-amd64 -o "${BIN_DIR}/ollama" || return 1
  chmod +x "${BIN_DIR}/ollama"
  ollama serve &> /dev/null &
  echo -e "Finished: \033[32m✔\033[0m\n"
}

get_models() {
  MODELS_FILE="models.list"
  MODELS_DEFAULT="orca-mini"

  # We check if the models file exists
  if [ ! -f "${MODELS_FILE}" ]; then
    echo "File ${MODELS_FILE} not found, ${MODELS_DEFAULT} used by default."
    echo "${MODELS_DEFAULT}" > "${MODELS_FILE}"
  fi

  # Loop through each line (even a last one without newline) and pull the model
  while IFS= read -r line || [ -n "${line}" ]; do
    # Skip empty lines
    [ -z "${line}" ] && continue
    echo "Pulling ${line} model..."
    if ! ollama pull "${line}" > /dev/null 2>&1; then
      echo "Failed to pull ${line}."
      return 1
    fi
    echo -e "Finished: \033[32m✔\033[0m\n"
  done < "${MODELS_FILE}"
}

# We start ollama, and wait for its process to appear
if ! ollama_start; then
  echo -e "\nError during ollama setup."
  exit 1
fi

count=0
MAX_TIME=10
while ! pgrep -x "ollama" > /dev/null; do
  if [ "${count}" -lt "${MAX_TIME}" ]; then
    sleep 1
    count=$((count+1))
  else
    echo "Application 'ollama' did not launch within ${MAX_TIME} seconds."
    exit 1
  fi
done

if ! get_models; then
  echo -e "\nError during models downloading."
  exit 1
fi

This code may seem a little long, but that’s mainly because we’ve made it modular, logging only successful steps and any errors.
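One limit worth knowing: pgrep only tells us that an ollama process exists, not that its API already answers. A generic retry helper is one way to wait for the server itself; wait_for and WAIT_INTERVAL below are hypothetical names used for this sketch.

```shell
#!/bin/bash
# Sketch of a hypothetical wait_for helper: run a command until it succeeds,
# at most ATTEMPTS times, pausing WAIT_INTERVAL seconds between tries.
WAIT_INTERVAL="${WAIT_INTERVAL:-1}"

wait_for() {
  local attempts="$1" i
  shift
  for ((i = 1; i <= attempts; i++)); do
    # Success as soon as the command exits with status 0
    if "$@" > /dev/null 2>&1; then
      return 0
    fi
    # No pause after the last failed attempt
    if [ "$i" -lt "$attempts" ]; then
      sleep "$WAIT_INTERVAL"
    fi
  done
  return 1
}
```

Assuming ollama listens on its default address, wait_for 10 curl -fs http://127.0.0.1:11434/ would retry for about ten seconds until the server responds, and return a non-zero status otherwise.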

ollama_start.sh detects whether the ollama server is already present before starting the web interface. If it isn’t, we launch it in the background (we’ll see why below).

Open it with your favorite editor and insert the following content:

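#!/bin/bash

# -x matches the process name exactly: with -f, "ollama" would also match
# the command line of this very script (ollama_start.sh)
if ! pgrep -x "ollama" > /dev/null; then
  # If 'ollama' is not running, start it in the background
  ollama serve &
  echo "Application 'ollama' started."
else
  # If 'ollama' is already running
  echo "'ollama' application is already running."
fi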

Once these two files have been created, we can deploy the application:

git add . && git commit -m "Init application"

clever deploy

clever domain

You’ll then see the domain where the application can be reached (you can customize it).

Cache and quick (re)start

Using Clever Cloud not only means deploying such an application easily, with logs and monitoring, and being able to instantly change its horizontal and/or vertical scaling. It also means being able to turn it into an image ready for a fast redeployment.

In fact, every application we host is stored as an archive once its build is complete. This means it can be stopped and restarted from this archive, so we don’t have to re-download the models every time. To do this, simply specify the folders to be added to the archive:

clever env set CC_OVERRIDE_BUILDCACHE "/:../.ollama/:../.local/bin/"

clever restart --without-cache --follow

The application restarts with a fresh build, which stores these folders in its new cache. From then on, restarts take the built web interface, ollama and the models from this cache, as the CC_PRE_BUILD_HOOK and CC_POST_BUILD_HOOK steps are not executed. This is why the ollama server has to be started by CC_PRE_RUN_HOOK, and why ollama_start.sh first checks that it isn’t already running: during a full deployment, ollama_setup.sh has already launched it to download the models.
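The entries of CC_OVERRIDE_BUILDCACHE are separated by colons. To check that the folders you list actually exist before the cache is built, you could use something like the hypothetical helper below. It assumes entries are resolved relative to the application folder, so ../.ollama/ designates a sibling of that folder.

```shell
#!/bin/bash
# Sketch of a hypothetical helper, check_cache_paths: split a
# CC_OVERRIDE_BUILDCACHE-style value on ":" and report whether each entry
# exists. Assumption: entries are relative to the application folder.
check_cache_paths() {
  local value="$1" app_dir="${2:-.}" entry
  local -a entries
  # Split on ":" into an array, avoiding word splitting and globbing surprises
  IFS=':' read -r -a entries <<< "$value"
  for entry in "${entries[@]}"; do
    if [ -e "${app_dir}/${entry}" ]; then
      printf 'ok      %s\n' "$entry"
    else
      printf 'missing %s\n' "$entry"
    fi
  done
}
```

For instance, calling check_cache_paths "$CC_OVERRIDE_BUILDCACHE" "$PWD" at the end of ollama_setup.sh would print one ok or missing line per entry.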

Once this step is complete, you can stop the application and restart it whenever you like – it’ll be much faster from now on. And you’ll only be charged for the time you use it, by the second. If you wish to modify the list of models to use, do so in the dedicated file. A git push will be enough to rebuild the new cache.

# Stop/Restart the application

clever stop

clever restart

# After a modification in models.list, git push to recreate the cache

git add models.list && git commit -m "New models"

clever deploy

You’ll find the files you need for this project in this GitHub repository, with the environment variables ready to import (clever env import < .env).
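If you prefer to write that .env file yourself, the sketch below gathers the variables set in this article. It assumes the import accepts dotenv-style NAME="value" lines; compare it with the repository’s own file before relying on it.

```shell
#!/bin/bash
# Sketch: write the environment variables used in this article to .env.
# The quoted 'EOF' delimiter prevents the shell from expanding anything.
# Assumption: dotenv-style NAME="value" lines are accepted by the import.
cat > .env <<'EOF'
CC_PRE_BUILD_HOOK="./ollama_setup.sh"
CC_POST_BUILD_HOOK="npx next telemetry disable && npm run build"
CC_PRE_RUN_HOOK="./ollama_start.sh"
CC_OVERRIDE_BUILDCACHE="/:../.ollama/:../.local/bin/"
EOF
```

Then, from the application folder: clever env import < .env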

And if you want to push things further, deploy other models and tools for your applications that require more power and/or GPUs, let us know!